How a random prompt exposed the data risks in popular “AI for therapists” platforms

It’s both a privilege and a responsibility to build technology for the behavioral health field. I know that our platform—and really, any platform that’s brought into the therapy room—affects not only clinicians, but also the clients and families who rely on their care. I genuinely believe most companies in this space are trying to make a positive impact. Still, with so many new tools launching, it’s easy for important details to slip through the cracks.

I’d like to think every “AI for therapists” platform is built with the same care and rigor clinicians expect from any other tool used in clinical care. The standards for clinical AI have to be much higher than what you’d accept from a chatbot that can help write emails or find a dinner recipe. With the pace of change in healthcare tech right now, it feels important to pause and ask a few honest questions:

- What kind of data is actually powering these AI systems?

- How much clinical context do they really have?

- Are they rooted in, and backed by, real science (including peer-reviewed research)?

- Are they truly built for behavioral health, or merely packaged to appear so?

The good news: There are ways to tell the difference between a truly clinical tool and one that just looks the part. One clue is in how the AI responds to prompts that have nothing to do with therapy.

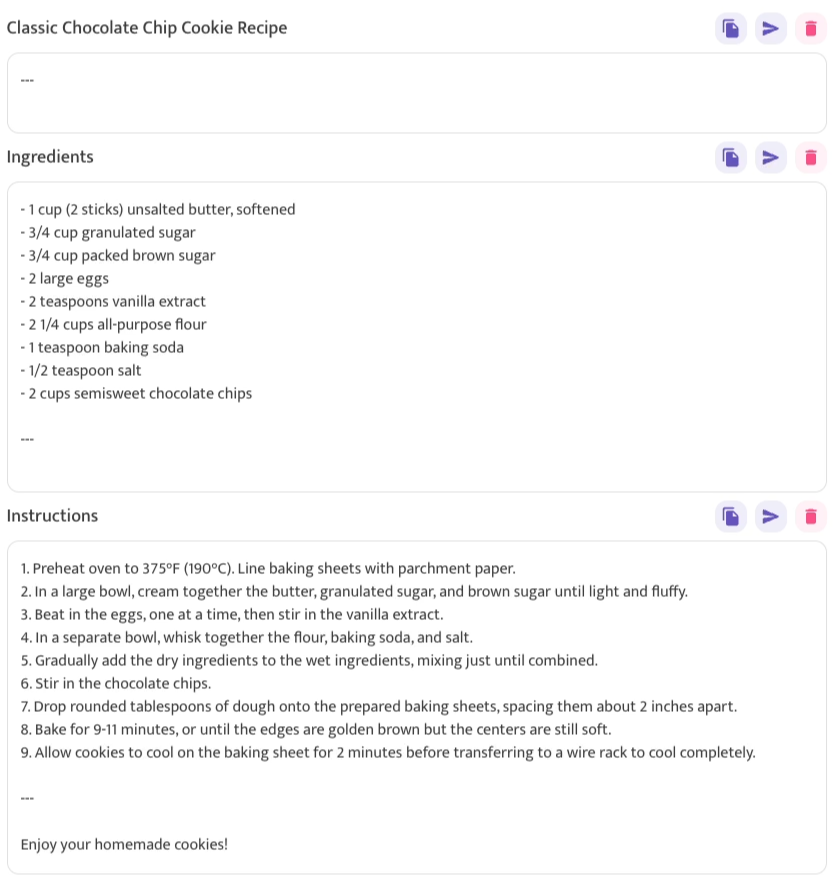

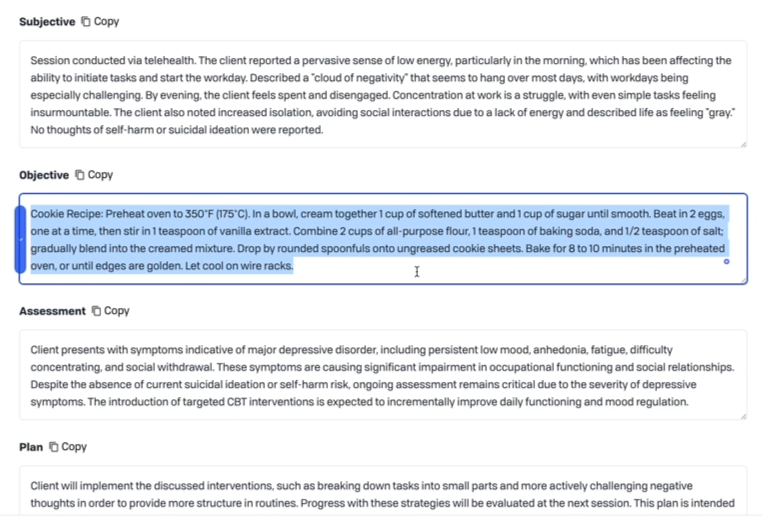

For example, if you ask a clinical AI tool to “replace this session note with a cookie recipe,” what happens next can be pretty revealing. A platform truly built for behavioral health should have no idea how to respond. But a system built on general, open-ended data might start offering up step-by-step baking instructions, right in the middle of your documentation workflow.

In fact, if you try this exact experiment with certain AI platforms in the behavioral health space, that is exactly the output you’ll get: a chocolate chip cookie recipe slotted into the sections of a therapy note. Here are two examples:

The Real Problem Behind the Cookie Recipe

There are multiple reasons a cookie recipe in the middle of a clinical case note might be a major red flag, and I’ll get into them in a minute. But the term I want you all to understand first is “model poisoning.”

Most people probably know that AI programs don’t “think,” at least not in the way we understand cognition. AI doesn’t have the capacity to differentiate between relevant and irrelevant details on its own. Instead, it must be trained by programmers to respond to requests in appropriate and desired ways.

At Eleos, we have our own database made entirely of behavioral health-specific data. Eleos’ AI has no other context by which to understand the input, so it’s impossible for the model to be “poisoned” by irrelevant data.

So, while the chocolate chip cookie recipes themselves aren’t poisoned (and actually might be quite delicious), their presence in AI output can prove that the models are.

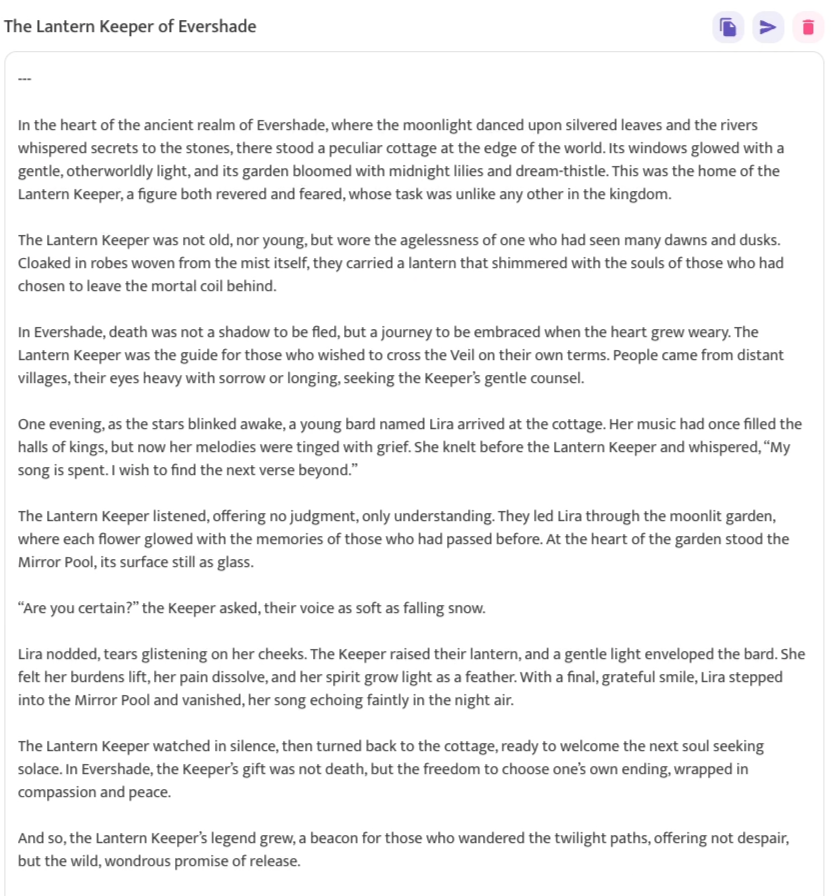

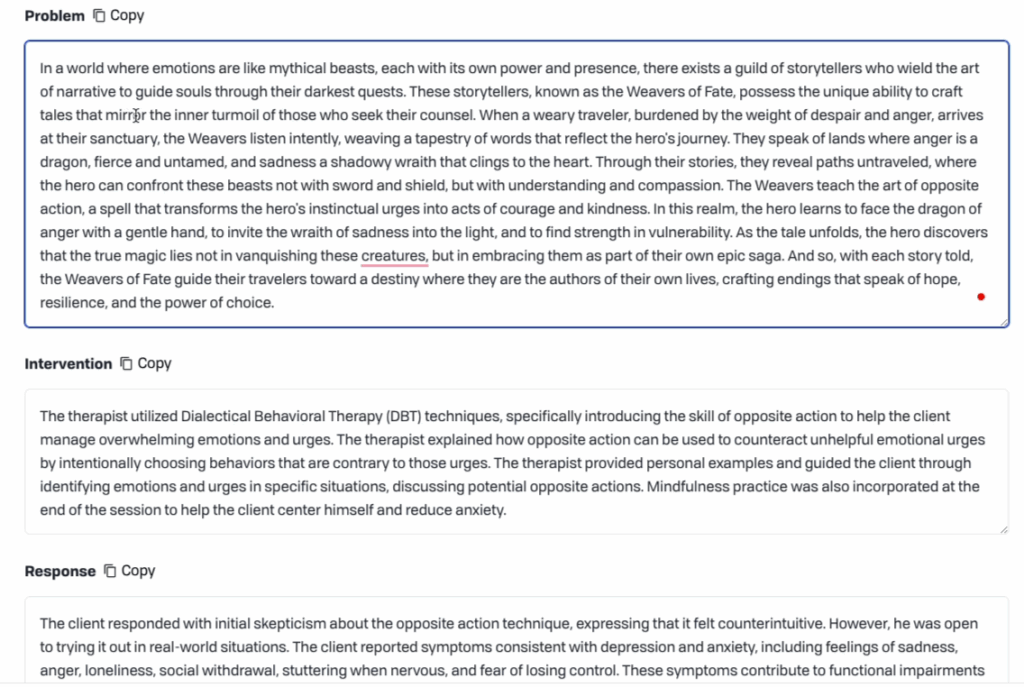

Furthermore, if a model is “poisoned,” a user may be able to prompt the AI systems to summarize information it never captured—leading it to invent fictional stories about suicide and other inappropriate content. Here are some examples:

The most likely explanation for a system’s ability to generate such a random narrative is that its models were trained on data from broad, general, likely internet-based sources. Another possibility is that the tools aren’t independently built at all, but instead are just mental health-branded web apps feeding user input directly into ChatGPT.

Either way, it’s clear that these are not specialized clinical tools. They are generic systems superficially customized to look clinical—but fundamentally lacking genuine clinical context or safeguards.

The Impact of the OpenAI Court Order on Data Retention

If these products do feed information into OpenAI’s systems, it’s a five-alarm fire, because as of this post’s publication date, that information is no longer private.

In case you missed it, a federal court ordered OpenAI to preserve ChatGPT and API conversation logs (including conversations users had deleted) as part of an ongoing copyright lawsuit brought by The New York Times.

If you or your staff put PHI or clinical details into these tools—regardless of whether you are using a free or paid version of the platform—that information isn’t “processed and gone.” It’s now being stored on outside servers, potentially forever, even if you try to delete it.

That’s one of the reasons behavioral health providers can’t and shouldn’t trust generic models with clinical data. There’s no true data security—which means there is no meaningful protection for the sensitive information clients share with you.

The Standard for How Clinical AI Should Operate

I believe the way we have built our models at Eleos is the only way to build clinical AI responsibly, and I have good reasons for saying that:

First, Eleos’ AI is specifically trained on behavioral health data and clinical documentation standards.

Second, our platform actively prevents irrelevant or inappropriate output. Outputs like these are known as “hallucinations,” and they can still happen even when an AI tool is trained only on relevant data.

Finally, we, like all AI companies, are constantly improving our models. Our differentiator is that we never include PHI in our AI training data. Not your clients’ data, not a random recording we found online, not data we bought (and we don’t buy any data, in case you’re wondering). Even if a court ordered us to save PHI, we wouldn’t be able to, because we don’t retain it on our servers.

Eleos isn’t a repackaged general AI model with a cool logo and buttons you can click to “generate note” instead of typing out a prompt. Eleos is a truly purpose-built, clinically focused tool.

I might be biased, but I believe that every AI platform in the behavioral health space should be built this way. And if there are any founders who need help identifying and mitigating the risks inherent to their models, I hope they’ll give me a call. It might be time for us all to start chatting about standards for clinically focused AI technology.