Over three packed days in Philadelphia, NatCon25 reaffirmed what many of us sensed a year ago: artificial intelligence is no longer a “pilot project”—it’s a core utility for community behavioral health and SUD organizations. AI-focused sessions were standing‑room‑only, hallway chatter revolved around risk questionnaires, and the biggest applause lines emphasized tools that hand clinicians back precious minutes with their clients.

Below is a deeper dive into the biggest AI themes I observed during the conference: the preeminence of security, the emerging compliance opportunity, and the new generation of documentation workflows (with a few related reflections on Eleos’ Spring Launch Event thrown in for good measure).

From Prediction to Reality: AI Enters the Mainstream

In last year’s NatCon recap, I wrote that “AI will leap from the therapy room to the back office,” and that wider adoption would follow. Twelve months later, the numbers speak for themselves:

- 80% of US hospitals now use some form of AI in clinical or operational workflows.

- 67% of AI decision‑makers plan to increase their generative AI investment in the next year.

In other words, provider organizations no longer view AI as a shiny new tool to demo, but as a core enterprise application. I would advise those of us who develop such applications to do the same. More on that below.

When AI Goes Mainstream, Security Becomes Mission‑Critical

NatCon25 devoted multiple learning tracks to AI governance and security, including a standing-room-only session on model ethics and data protection.

“Your clinicians and direct line staff are looking for ways to solve problems and ways to spend more time with people, because that’s what they went to school for. [So we thought], how could we bring this forward in a way that matches our values and feels good to the people who are doing the work and who want to have some innovative solutions?” – Dixie Casford, CAO, Clinica Health and Wellness, during our NatCon25 session on AI governance and policy

My takeaway? When vetting potential AI solutions, there are three critical risk layers to consider:

1. Data Security: Where Does Your Data Sleep at Night?

If you’re shopping around for an AI solution, it’s important to zero in on what data the platform actually stores. Most vendors still store full audio recordings and text transcripts, creating a treasure trove of sensitive PHI for attackers and a never-ending compliance headache. Even if a vendor claims to anonymize data, no anonymization process is 100% effective, statistically speaking.

Eleos takes a different tack: our multimodal pipeline extracts only the key moments providers need to finalize their documentation and discards raw text, so PHI never lingers in plain language. That design helped us earn HIPAA, SOC 2, and ISO 27001 certification, and we are now pursuing the new ISO 42001 AI management standard.

2. Model Security and Diversity: Is Your AI Trustworthy by Design?

Once you know your data is safe—and that you don’t have full session recordings, transcripts, or PHI roaming around somewhere—then, as a sophisticated AI buyer, you must ask: what happens in the model layers? How can you be certain the models are not biased? Are you sure this tool isn’t just a wrapper around ChatGPT?

Don’t be fooled: AI bias can’t be fixed by a single audit stamp. It’s mitigated through diverse training data and transparent evaluation. Eleos is built on the largest known dataset of real behavioral‑health sessions—millions of minutes across a wide range of demographics—so our models start from a richer baseline. We back that up with our independent TRUST certification and our unique bias‑proofing framework. We’re also one of the only healthcare AI companies with an entire team of in-house, PhD-level data scientists and practicing behavioral health providers to oversee model performance.

Building an enterprise-level AI product means deeply understanding your customers’ workflows and needs, especially from an IT standpoint. That’s the true difference between full-blown clinical AI suites and low-grade ChatGPT wrappers. Wrappers can easily be hacked or manipulated, which leaves your organization about as vulnerable as it would be if your clinicians pasted PHI directly into ChatGPT. Below are real examples (from products on the market today) of how these less-secure solutions can be manipulated. Some of them might seem funny (like a clinical AI tool generating a cookie recipe), but the implications are no laughing matter.

In the example above, a clinical AI tool has been prompted to come up with a cookie recipe. If your tool pulls content like this into a progress note, what other security risks may be present? A clinical AI tool that allows full access to its underlying model with minimal protection is a major security red flag. This lack of control means unauthorized individuals could interact directly with the underlying AI model.

That access opens the door to what is known as “model poisoning.” If a vendor retrains or fine-tunes its model on user-supplied input, an attacker could intentionally feed the model misleading or malicious data. Over time, this can corrupt the model’s training and cause it to generate inaccurate, biased, or even harmful outputs. If this corrupted model is used in clinical decision-making or documentation, it could lead to errors in care.

Furthermore, a lack of security around model access can make the system vulnerable to data extraction or manipulation. If the AI model has access to PHI, a poorly secured entry point could allow attackers to bypass other security measures and potentially gain unauthorized access to sensitive client data. This could lead to severe privacy breaches and regulatory violations.

In essence, inadequate security at the AI model level creates a direct pathway for malicious actors to interfere with the core intelligence of the system—potentially compromising the confidentiality, integrity, and availability of PHI.

Above is a great example of how a user can “poison” the model, leading to hallucinations that could potentially find their way into the patient’s chart.
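For the technically curious, here is a minimal Python sketch of one kind of control that can sit between a model and the chart: treating generated text as untrusted and holding anything that looks off-topic for human review. To be clear, this is an illustration under my own assumptions, not a description of how Eleos or any other vendor works. The pattern list and the function name `screen_draft_note` are hypothetical, and a keyword screen like this is only one thin layer, never a complete defense.

```python
import re

# Hypothetical deny-list for illustration only; a real product would need
# far more robust checks than keyword patterns.
OFF_TOPIC_PATTERNS = (
    r"\brecipe\b",
    r"\bpreheat\b",
    r"\bignore (all )?previous instructions\b",
)


def screen_draft_note(draft: str) -> list[str]:
    """Return the patterns that matched, i.e. reasons to hold the draft.

    An empty list means this particular screen found nothing suspicious;
    it does not prove the draft is safe or clinically accurate.
    """
    lowered = draft.lower()
    return [p for p in OFF_TOPIC_PATTERNS if re.search(p, lowered)]


def can_auto_insert(draft: str) -> bool:
    """Only let a draft flow toward the chart when the screen is clean."""
    return not screen_draft_note(draft)
```

The design choice worth noting is the default: a draft that trips any check is held for a human, rather than silently cleaned up and passed along.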

3. Application Security: How Does the AI Talk to the EHR?

Even the safest model can be undone by a leaky integration. So, the third and final layer of security has to do with the way the AI product interacts with the system of record.

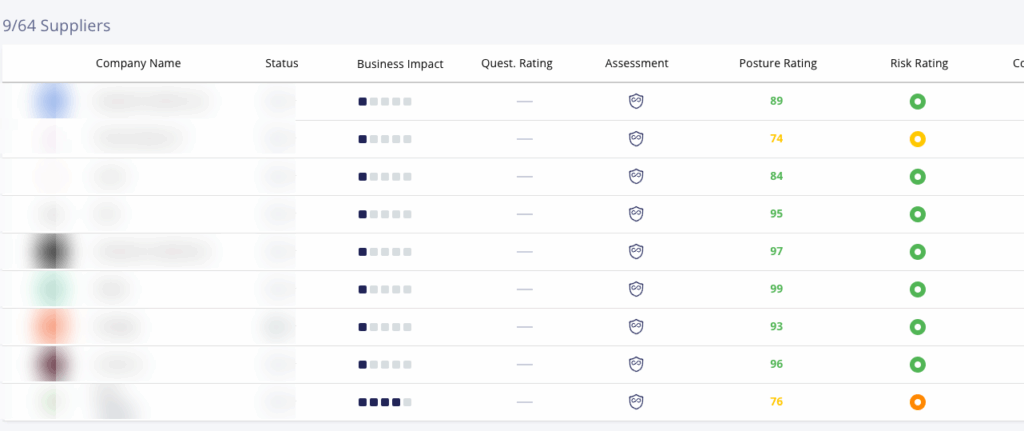

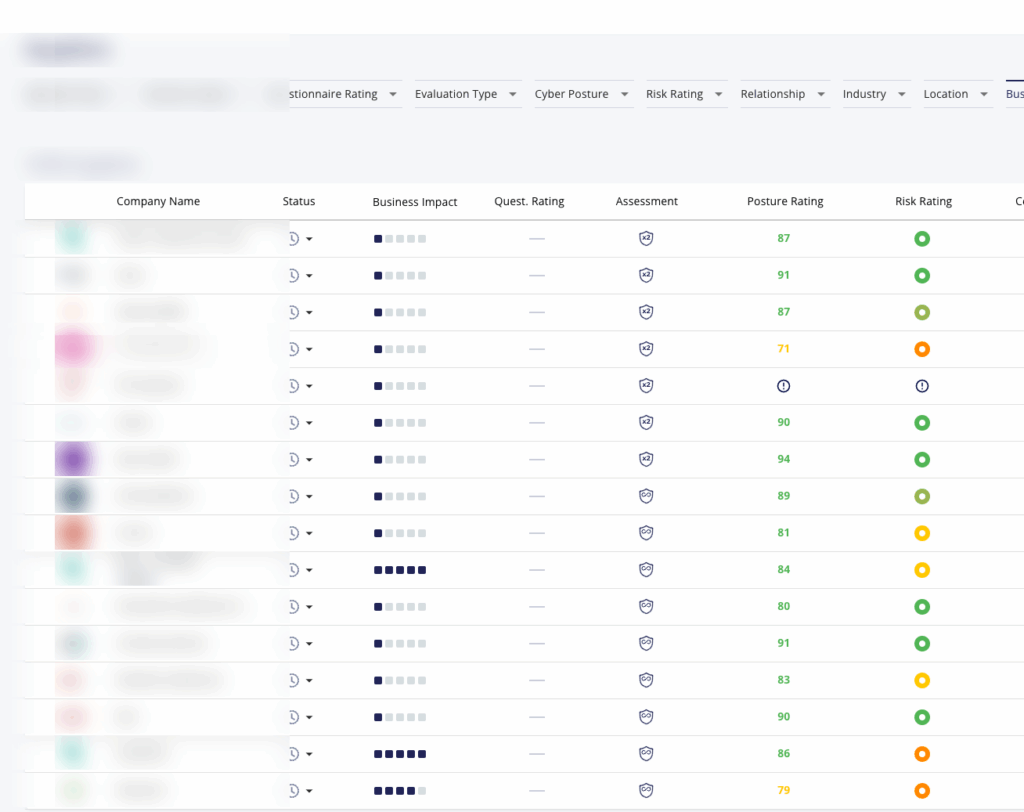

Here, third‑party rating tools like Panorays (shown below) give buyers an external view of vendor posture. Their scoring rubric puts a 99/100 company in the top percentile for cyber hygiene (Eleos’ score is 98).

As far as I know (and based on the many conversations I had at NatCon25), Eleos is the only behavioral‑health AI vendor with a full‑time CISO and dedicated in-house security team—further proof that we treat the application layer as production‑grade infrastructure, not a side project.

Think of legacy system-of-record data as boxes neatly stacked in a warehouse. AI pipelines, by contrast, are more like a fast‑moving river of audio and embeddings. Warehouses need locks; rivers need levees, sensors, and real‑time monitoring. Protecting one doesn’t automatically protect the other.

Compared to legacy healthcare tech platforms, building a secure AI solution at scale requires a completely different level of security and internal knowledge. This simply cannot be outsourced to a third party, no matter how well-known it is. It is core to the product and the vendor’s security culture—and it cannot be overlooked.

The Future of AI Brings Excitement, Optimism, and a Hint of Anxiety

Leaders I spoke with were energized by AI’s ability to refocus clinicians on care. Yet many whispered concerns about the current administration’s intensifying focus on eliminating fraud, waste, and abuse (FWA). For them, tools that can surface missing elements in a note or flag anomalous billing patterns are starting to be treated less as “nice to have” and more as necessary insurance against retroactive clawbacks.

AI‑driven compliance solutions like the one Eleos released in our Winter Launch Event can flag documentation gaps before auditors do, turning fear into proactive quality management. Organizations that ignore AI will start the next regulatory cycle at a disadvantage.
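To make "flagging documentation gaps" concrete, here is a deliberately simple Python sketch of the most basic version of the idea: checking a note for required elements before it is signed. Everything in it is an assumption for illustration. The field names and the `find_gaps` function are hypothetical, actual requirements vary by payer and state, and this is not how the Eleos compliance product is implemented.

```python
# Hypothetical required elements; real payer and state rules differ.
REQUIRED_FIELDS = (
    "service_date",
    "duration_minutes",
    "diagnosis_code",
    "intervention",
    "signature",
)


def find_gaps(note: dict) -> list[str]:
    """Return the required fields that are absent or blank in the note."""
    gaps = []
    for name in REQUIRED_FIELDS:
        value = note.get(name)
        # Treat missing keys, None, and whitespace-only strings as gaps.
        if value is None or not str(value).strip():
            gaps.append(name)
    return gaps


draft = {
    "service_date": "2025-05-06",
    "duration_minutes": 50,
    "diagnosis_code": "F41.1",
    "intervention": "CBT: cognitive restructuring",
    "signature": "",
}
print(find_gaps(draft))  # ['signature']
```

A rule-based check like this only catches what is missing outright. Judging whether what is present actually supports the billed service is the harder problem.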

With NatCon25 Came Reunions, Partnerships, and a New Rallying Cry

Despite all the tech talk, the mood across NatCon25 remained mission‑driven. Executives from community mental health centers and SUD orgs spoke less about “productivity” gains than about freeing up more time for high-quality care. This was especially evident during the heavily buzzed-about preview screening of the Eleos-sponsored documentary film, “Who Cares?” (Check out the trailer here and stay tuned for more details about the official premiere!)

For those of you who attended the screening, came to our learning workshops, or even just stopped by the booth, thank you. I think I speak for the entire Eleos team when I say that nothing beats catching up with our customers and partners face‑to‑face; your stories fuel everything we build.

And a special thank-you to all the guests at our dinner event at Fels Planetarium. In addition to amazing food and music, live entertainment from a caricature artist, and a general air of camaraderie, this event gave us a galaxy-sized stage to unveil our latest upgrade to the Eleos Documentation product—very on-point for the “out of this world” theme we carried through the conference.

The new Documentation experience is a direct reflection of feedback from the 150-plus organizations we serve—an answer to their collective request to put more control into the hands of human providers, give them even more of their precious time back, and equip them with a lightweight solution that is even easier to use than before. We’re actually hosting a virtual Spring Launch Event on June 5 to dive deeper into this new experience and chat with early adopters at Hillsides about the benefits they’ve seen so far—be sure to save your spot here!

Inspired by SAP’s famous tagline, we left Philadelphia with a new mantra:

“The best-run provider organizations run on Eleos.”

See you all at NatCon26. Until then, keep turning AI’s promise into everyday impact. As Felicia Jeffery, CEO of Gulf Coast Center, so eloquently stated during our AI governance workshop:

“Automate the ordinary, so you can innovate the extraordinary.”